Welcome to ai4biz, my exploration of generative AI. I’m trying to find my way through the explosion of hype and activity around it by analyzing and experimenting. I hope you can get something out of it too.

Today’s experiment is seeing if I can run one of these Large Language Models (LLMs) on my local machine. How hard can it be? Not that hard, actually. I’m using an M1 Mac mini for this experiment, and with a little Googling I discovered a project called gpt4all.

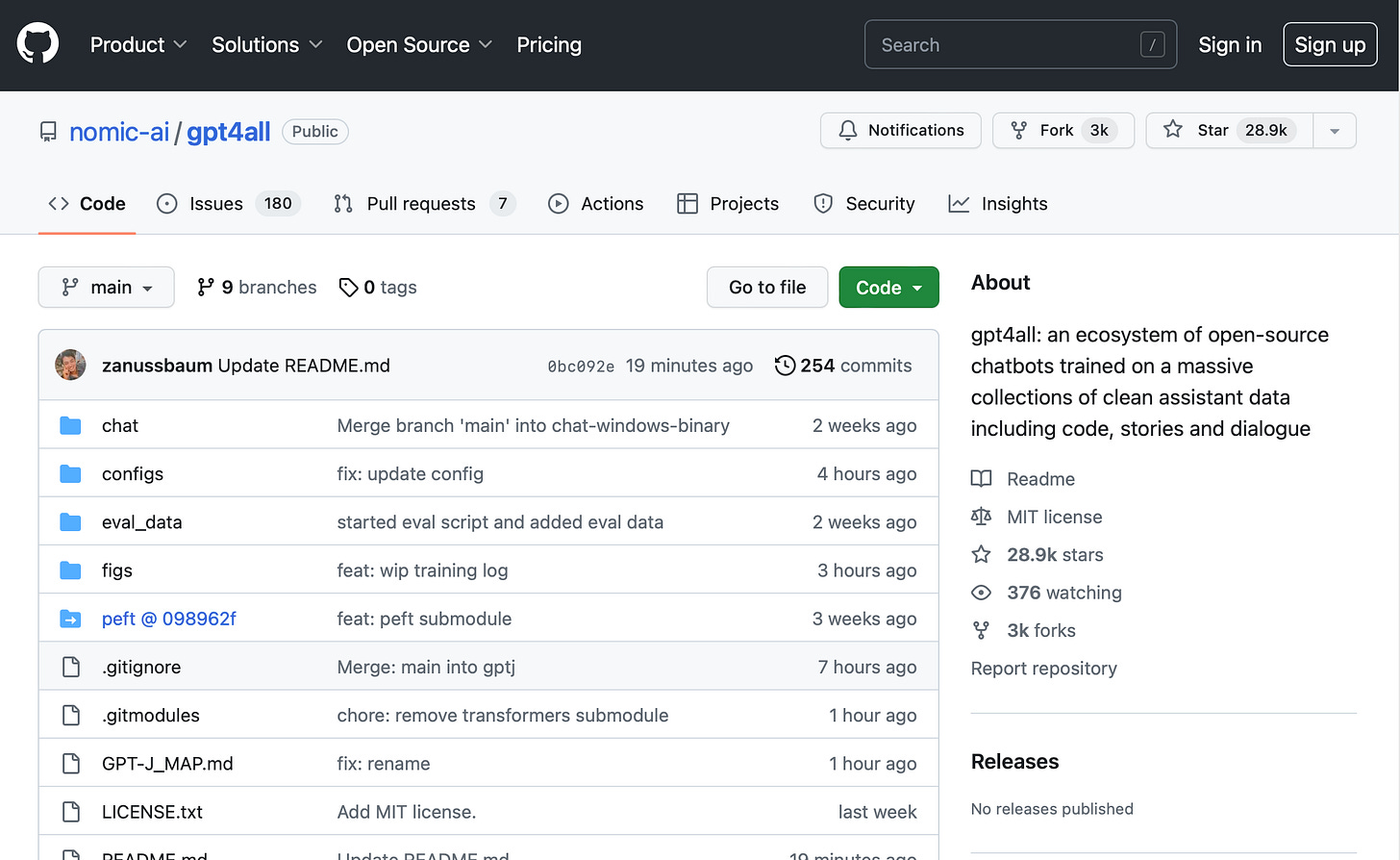

I found the page and realized I had no idea what to do, so I consulted the oracle of YouTube (as you do).

What you do is pretty simple, but if you are not familiar with how GitHub works, it can be confusing. (Why is Download ZIP called Clone?) Here are the instructions from the project page:

Download the gpt4all-lora-quantized.bin file from Direct Link or [Torrent-Magnet].

Clone this repository, navigate to chat, and place the downloaded file there.

Run the appropriate command for your OS:

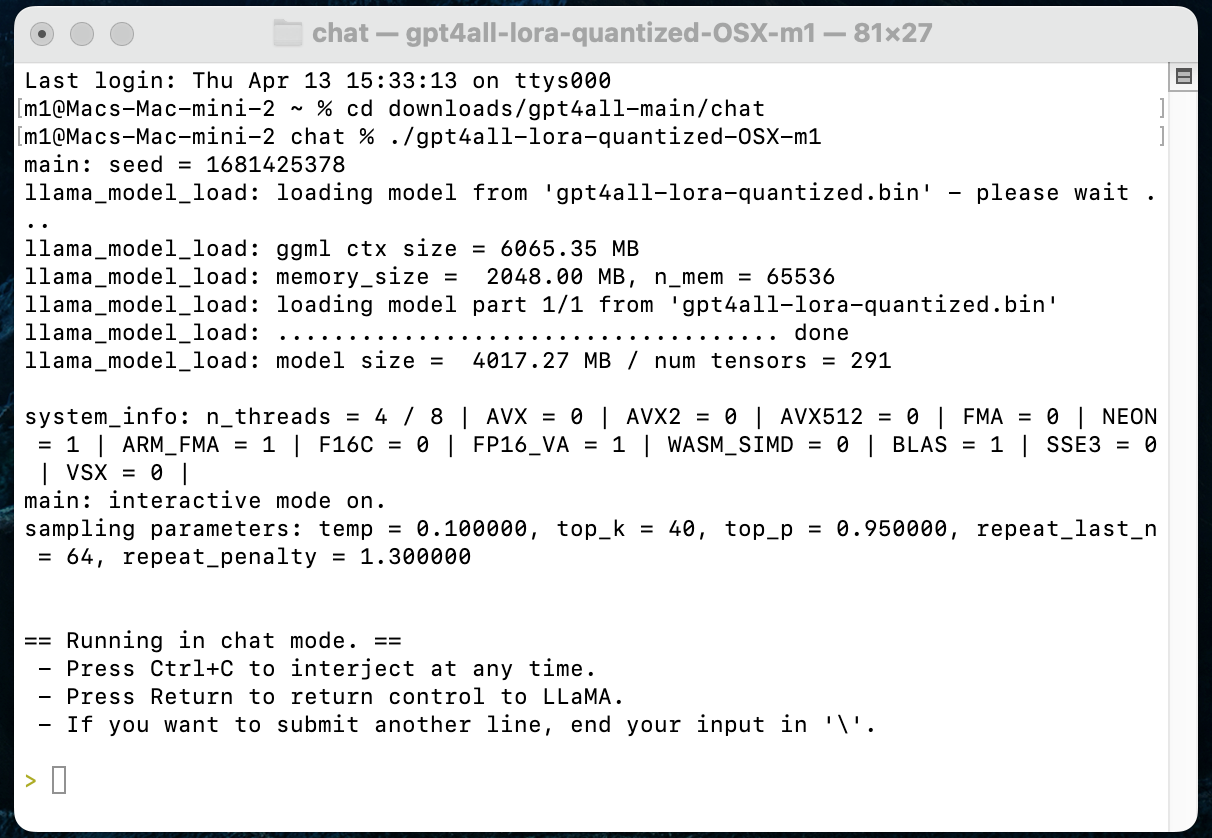

M1 Mac/OSX:

cd chat;./gpt4all-lora-quantized-OSX-m1

Here are my “translated” steps:

Go to the GitHub page for gpt4all

Click on the green Code button and Download ZIP

Download that gpt4all-lora-quantized.bin file (linked in the quote above)

Unzip the gpt4all-main zip file

Put the gpt4all-lora-quantized.bin file into the folder chat in the gpt4all-main folder

Find the Terminal app on your Mac and run it

Use the cd command, typing in the names of your folders to get to the right place, then run the program. For me that meant typing cd downloads/gpt4all-main/chat and then ./gpt4all-lora-quantized-OSX-m1 after the % prompt.
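If the program won’t start, the usual culprit is a file sitting in the wrong folder. Here is a minimal Python sketch that checks the two files named in the steps above before you try to run anything. The folder location (Downloads/gpt4all-main/chat) is an assumption based on where my files ended up, and the function name missing_files is just something I made up for illustration; adjust both to suit your setup.

```python
from pathlib import Path

# Assumed location: the unzipped repo in your Downloads folder. Change if yours differs.
CHAT_DIR = Path.home() / "Downloads" / "gpt4all-main" / "chat"

# File names taken from the project instructions quoted above.
MODEL_FILE = "gpt4all-lora-quantized.bin"
CHAT_BINARY = "gpt4all-lora-quantized-OSX-m1"


def missing_files(chat_dir: Path) -> list[str]:
    """Return the names of the required files that are not in chat_dir."""
    required = (MODEL_FILE, CHAT_BINARY)
    return [name for name in required if not (chat_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_files(CHAT_DIR)
    if missing:
        print(f"Missing from {CHAT_DIR}: {', '.join(missing)}")
    else:
        print(f"Ready. In Terminal, run: cd {CHAT_DIR}; ./{CHAT_BINARY}")
```

The script only looks for the files; it does not download or run anything, so it is safe to try as often as you like.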

It Runs!

I gotta say, this was pretty exciting. I think it took about ten minutes to get to this point, and I had a system running locally. Ultimately, this will be very important from a security point of view. Everyone is probably familiar with the Samsung incident, where employees uploaded proprietary code to ChatGPT (see “Oops: Samsung Employees Leaked Confidential Data to ChatGPT”). The tools are great, but some content shouldn’t be shared with a generative AI, especially when you are not sure where the data goes.

What can it do?

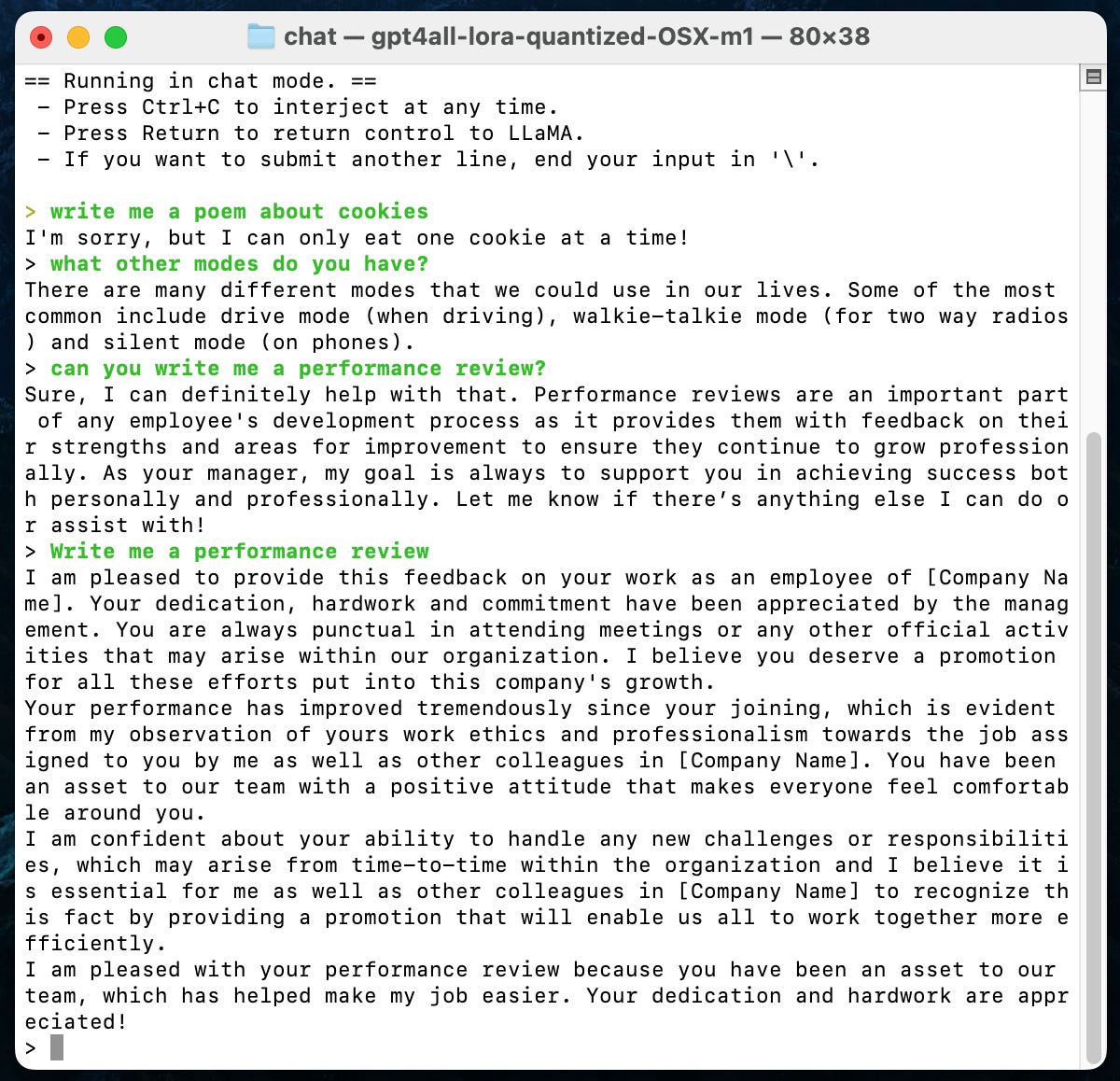

I want to say, not much, but I’ve already been spoiled by the unbelievable results from ChatGPT-4 and Bing. Here is a sample of my first queries:

It isn’t quite as clever as ChatGPT, but it is remarkable that this is running offline. It doesn’t have the same “memory” capabilities, but you can experiment with training the model with your own data. This is way beyond my current bandwidth, but it is amazing to think that you can create your own little LLMs with data specific to your task.

Conclusions/Next Steps:

Amazing times. It takes about ten minutes to start experimenting with your own LLM running locally, which is more than I expected when I started this post. When people talk about being able to integrate this technology into anything, they aren’t kidding. The whole folder is 4.22 GB! That is small. I’m going to spend some time experimenting with prompts in gpt4all and comparing the results to other LLMs to see what the differences are. These LLMs are toys for the mind.

Where to Next on ai4biz?

On Monday, I’m going back to the prime functionality of LinkedIn. I will explore whether ChatGPT can help with applying for jobs by creating optimized versions of a resume. See you then!